Installing K8S

#1. Disable SELinux on all nodes

setenforce 0

sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

#2. Make sure the firewall is disabled on all nodes

systemctl stop firewalld

systemctl disable firewalld

#3. Add the k8s package repositories

cat <<EOF > kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

mv kubernetes.repo /etc/yum.repos.d/

# yum-config-manager is provided by yum-utils, so install that first
yum -y install yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#4. Install the required components on all nodes

yum install -y kubelet-1.22.4 kubectl-1.22.4 kubeadm-1.22.4 docker-ce

systemctl enable kubelet

systemctl start kubelet

systemctl enable docker

systemctl start docker

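#5. On all nodes: Kubernetes recommends that Docker (and other runtimes) use systemd as the cgroup driver; otherwise kubelet fails to start

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://4puehki1.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF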

sudo systemctl daemon-reload

sudo systemctl restart docker
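The daemon.json step can be wrapped in a small helper so that the registry mirror and the systemd cgroup driver are always written together. This is a minimal sketch, not part of the original procedure: the function name `write_docker_daemon_json` is hypothetical, and the target directory is a parameter so the file can be generated somewhere harmless first.

```shell
#!/bin/sh
# Hypothetical helper: write a daemon.json that sets both the registry mirror
# and the systemd cgroup driver.
# Usage: write_docker_daemon_json DIR MIRROR_URL
write_docker_daemon_json() {
  dir=$1
  mirror=$2
  mkdir -p "$dir" || return 1
  cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

On a real node you would run `write_docker_daemon_json /etc/docker https://4puehki1.mirror.aliyuncs.com`, followed by `systemctl daemon-reload && systemctl restart docker`. After that, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.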

#6. Initialize the cluster (run on the master node only)

# Initialize the cluster control plane

# If it fails, reset with kubeadm reset

# Minimal form:
# kubeadm init --image-repository=registry.aliyuncs.com/google_containers

# Or, with explicit options (use one form, not both):
kubeadm init \
  --apiserver-advertise-address=192.168.100.110 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.22.4 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16

### !!!WARNING!!! ###

# If you get an error like the following:

# [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

# To see the stack trace of this error execute with --v=5 or higher

# then re-initialize:

kubeadm reset # reset first

modprobe br_netfilter # the bridge-nf-call-* files only exist once this module is loaded

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

systemctl restart kubelet

# If it still fails, make sure /etc/docker/daemon.json also sets the systemd cgroup driver, then restart docker:

{
  "registry-mirrors": ["https://4puehki1.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

# Save the kubeadm join xxx command that is printed at the end

# If you lose it, regenerate it with: kubeadm token create --print-join-command
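If the output of kubeadm init was saved, for example with `kubeadm init ... | tee kubeadm-init.log`, you can pull the join command back out of the log instead of creating a new token. This is a minimal sketch: `extract_join_cmd` and the log file name are hypothetical. It assumes kubeadm prints the command as `kubeadm join ...` with backslash line continuations, which is the usual format. It prints the first join command it finds; on a multi-control-plane setup, that may be the `--control-plane` variant.

```shell
#!/bin/sh
# Hypothetical helper: print the first "kubeadm join ..." command found in a
# saved kubeadm init log, joining backslash-continued lines into one line.
# Usage: extract_join_cmd LOGFILE
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join/ { grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)            # drop leading indentation
      cont = sub(/[ \t]*\\$/, "", line)   # strip a trailing backslash, remember it
      out = (out == "" ? line : out " " line)
      if (!cont) { print out; exit }
    }
  ' "$1"
}
```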

#7. Copy the admin kubeconfig so that kubectl has permission to access the cluster

# If other nodes also need to access the cluster, copy this file from the master node to them

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# With ~/.kube/config in place on another machine, kubectl there can reach the cluster too

#8. Join the worker nodes to the cluster (run on worker nodes only)

kubeadm join 172.16.32.10:6443 --token xxx --discovery-token-ca-cert-hash xxx

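#9. Install a network (CNI) plugin. The flannel manifest below may well be unreachable from networks in mainland China; if so, look online for a domestic mirror of flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Use only one CNI plugin: flannel (above) or calico (below). To switch, first delete the one you applied:

# kubectl delete -f xxxx.yaml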

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

NFS Installation

1. Install NFS

yum install -y nfs-utils

2. Create the shared directory

mkdir /share

chmod 777 /share

3. Edit the export rules in the configuration file

vi /etc/exports

/share *(rw,sync,no_root_squash)

4. Start the nfs and rpcbind services

Restart the services

systemctl restart rpcbind

systemctl restart nfs-server

systemctl enable rpcbind

systemctl enable nfs-server

5. Check the exports

showmount -e localhost

6. Check the NFS status

Check the service status: systemctl status nfs

Stop the service: systemctl stop nfs

Start the service: systemctl start nfs

Restart the service: systemctl restart nfs
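Editing /etc/exports by hand works, but repeating the steps can add the same line twice. Below is a minimal idempotent sketch. The function name is hypothetical, and the exports file is passed as a parameter. On the real server, follow it with `exportfs -ra` to re-export everything in /etc/exports, then check the result with `showmount -e localhost` as in step 5.

```shell
#!/bin/sh
# Hypothetical helper: append "DIR OPTIONS" to an exports file unless DIR is
# already exported there. Usage: add_nfs_export FILE DIR OPTIONS
add_nfs_export() {
  file=$1 dir=$2 opts=$3
  touch "$file" || return 1
  # The first field of an export line is the directory; commented-out lines
  # start with "#" and therefore never match.
  if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$dir" "$opts" >> "$file"
}
```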

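3. NFS client installation

Install the NFS client utilities on every node that mounts NFS volumes, i.e. every Kubernetes node that may run such pods:

yum install -y nfs-utils

If running kubectl describe pod/pod-name shows the following error in Events:

networkPlugin cni failed to set up pod "test-k8s-68bb74d654-mc6b9_default" network: open /run/flannel/subnet.env: no such file or directory

create the file /run/flannel/subnet.env on every node with the following content. After that, wait a moment and the pods should recover:

FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true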
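flanneld normally generates /run/flannel/subnet.env itself, with a different FLANNEL_SUBNET for each node. Creating the file by hand is therefore a workaround rather than a fix. Below is a minimal sketch of that workaround. The function name is hypothetical, the defaults are the values given above, and the path is a parameter so you can try it outside /run first.

```shell
#!/bin/sh
# Hypothetical helper: write a flannel subnet.env file.
# Usage: write_flannel_subnet_env FILE [SUBNET]
# SUBNET defaults to 10.244.0.1/24 as in the text; a distinct /24 per node is preferable.
write_flannel_subnet_env() {
  file=$1
  subnet=${2:-10.244.0.1/24}
  mkdir -p "$(dirname "$file")" || return 1
  cat > "$file" <<EOF
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=$subnet
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
}
```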

K8S Configuration

StorageClass

NFS

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-sc
provisioner: sudo.com/nfs
parameters:
  server: 192.168.100.110
  path: /data/share
  readOnly: "false"

Local

# This first class uses the in-tree AWS EBS provisioner, so it only works on AWS
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
reclaimPolicy: Retain
allowVolumeExpansion: true
mountOptions:
  - debug
volumeBindingMode: Immediate
---
# Local volumes have no dynamic provisioner: use kubernetes.io/no-provisioner together with WaitForFirstConsumer
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-path
provisioner: kubernetes.io/no-provisioner
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer

# Set it as the default StorageClass

kubectl patch storageclass standard -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

PV & PVC

NFS:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-conf-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-sc
  nfs:
    path: /data/share/nginx/nginx-conf
    server: 192.168.100.110
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-conf-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs-sc

Local

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-conf-pv   # PersistentVolumes are cluster-scoped, so there is no namespace here
spec:
  capacity:
    storage: 1Gi
  hostPath:
    path: /data/nginx/nginx-conf
    type: DirectoryOrCreate
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-conf
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  # Must match the PV; if omitted, the default StorageClass is filled in and the claim will not bind
  storageClassName: local-path
  volumeName: nginx-conf-pv

Pod

NFS directly (volumes declared in the Pod spec)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: nfs-conf
          nfs:
            server: 192.168.100.110
            path: /data/share/nginx/nginx-conf
        - name: nfs-data
          nfs:
            server: 192.168.100.110
            path: /data/share/nginx/nginx-data
      containers:
        - name: nginx
          image: 'nginx:1.23.1'
          ports:
            - name: tcp-80
              containerPort: 80
              protocol: TCP
          resources:
            limits:
              cpu: '1'
          volumeMounts:
            - name: nfs-conf
              mountPath: /etc/nginx
            - name: nfs-data
              mountPath: /web
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
  labels:
    app: nginx
spec:
  ports:
    - name: http-nginx
      protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30679
  selector:
    app: nginx
  type: NodePort

Local

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: volume-nginx-conf
          persistentVolumeClaim:
            claimName: nginx-conf
        - name: volume-nginx-data
          persistentVolumeClaim:
            claimName: nginx-data
      containers:
        - name: nginx
          image: 'nginx:1.23.1'
          ports:
            - name: tcp-80
              containerPort: 80
              protocol: TCP
          resources:
            limits:
              cpu: '1'
          volumeMounts:
            - name: volume-nginx-conf
              mountPath: /etc/nginx
            - name: volume-nginx-data
              mountPath: /web
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: default
  labels:
    app: nginx
spec:
  ports:
    - name: http-nginx
      protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30679
  selector:
    app: nginx
  type: NodePort

K8S Commands

# List all pods

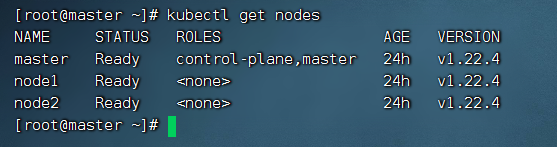

kubectl get pod

# List all nodes

kubectl get node

# Show the pod named wangzi

kubectl get pod wangzi

# Show the wangzi pod as JSON / YAML

kubectl get pod wangzi -o json

kubectl get pod wangzi -o yaml

# Show the wangzi pod with extra columns (node, pod IP, etc.)

kubectl get pod wangzi -o wide

# Describe a resource (useful when a pod fails to start, e.g. with an image pull error, to see the error messages)

kubectl describe pod wangzi

# Show service details

kubectl describe svc nginx-svc

# Show the service as YAML

kubectl get svc/nginx-svc -o yaml

# Force-delete a pod

kubectl delete pod wangzi --grace-period=0 --force

# Edit a deployment, change its replica count, or scale it

kubectl edit deployment/nginx -o yaml --save-config

kubectl patch deployments.apps -n ssx ssx-mysql-dm -p '{"spec":{"replicas":1}}'

kubectl scale --replicas=2 deployment nginx

# Copy a file from a pod to the local machine

kubectl cp -n tk0009-dev develop-tk0009-85c56d789-rr88p:/app.jar ./tk0009.jar

K8S Dashboards

Kuboard (paid when managing more than 3 clusters)

# Install with Docker

docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 80:80/tcp \
  -p 10081:10081/udp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.100.110:80" \
  -e KUBOARD_AGENT_SERVER_UDP_PORT="10081" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /root/kuboard-data:/data \
  eipwork/kuboard:v3.5.0.3

# Install on K8S

kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

# Alternatively, use the command below. The only difference is that it pulls the images Kuboard needs from Huawei Cloud's registry instead of Docker Hub

# kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3-swr.yaml

# Run the command below and wait until all pods in the kuboard namespace are ready:

watch kubectl get pods -n kuboard

# Uninstall Kuboard v3

kubectl delete -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

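# Run on the master node and on every node labeled k8s.kuboard.cn/role=etcd

rm -rf /usr/share/kuboard

KubeSphere (OPEN SOURCE && FOR FREE)

# Install on a master with at least 2 CPU cores and 3 GB of RAM, otherwise you will run into all sorts of bugs

# This seems to work only with the calico network plugin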

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/cluster-configuration.yaml

#1. Apply the NFS provisioner manifest (RBAC and provisioner Deployment)

kubectl apply -f nfs-rbac.yaml

#2. Create the StorageClass (run on the master node)

kubectl apply -f storageclass-nfs.yaml

#3. Make it the default StorageClass

kubectl patch storageclass storage-nfs -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

#4. Install metrics-server

kubectl apply -f metrics-server.yaml

kubectl top nodes

#5. Install KubeSphere

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

# This takes about 20 minutes

#6. Check the installation logs

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

#7. Check the console port

kubectl get svc/ks-console -n kubesphere-system

# Default console login: admin / P@88w0rd

systemctl restart kubelet

kubectl apply -f https://raw.githubusercontent.com/kubesphere/notification-manager/master/config/bundle.yaml

nfs-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "list", "watch", "update"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-client-provisioner
          image: lizhenliang/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: storage.pri/nfs
            - name: NFS_SERVER
              value: 192.168.100.23
            - name: NFS_PATH
              value: /data/share
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.100.23
            path: /data/share

storageclass-nfs.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: storage-nfs
provisioner: storage.pri/nfs
reclaimPolicy: Delete

metrics-server.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
  - apiGroups:
      - metrics.k8s.io
    resources:
      - pods
      - nodes
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
  - kind: ServiceAccount
    name: metrics-server
    namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      containers:
        - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            # Skip verification of the kubelets' TLS certificates
            - --kubelet-insecure-tls
          image: jjzz/metrics-server:v0.4.1
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /livez
              port: https
              scheme: HTTPS
            periodSeconds: 10
          name: metrics-server
          ports:
            - containerPort: 4443
              name: https
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /readyz
              port: https
              scheme: HTTPS
            periodSeconds: 10
          securityContext:
            readOnlyRootFilesystem: true
            runAsNonRoot: true
            runAsUser: 1000
          volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
        - emptyDir: {}
          name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

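Installing KubeSphere with KubeKey (Multi-Node)

# Run all of the following on every server:

Disable the firewall

Disable SELinux

Disable the swap partition

Synchronize time

Configure hosts resolution

Set kernel parameters

Check DNS

Install ipvs

Install dependencies

Install and configure docker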

#1. Disable SELinux on all nodes

setenforce 0

sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

#2. Make sure the firewall is disabled on all nodes

systemctl stop firewalld

systemctl disable firewalld

#3. Disable the swap partition

swapoff -a

echo "vm.swappiness=0" >> /etc/sysctl.conf

sed -i 's$/dev/mapper/centos-swap$#/dev/mapper/centos-swap$g' /etc/fstab
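The sed above only matches the default CentOS LVM swap device (/dev/mapper/centos-swap). The sketch below is slightly more general: it comments out every active fstab entry whose filesystem type (the third field) is swap. The function name is hypothetical, and the file is passed as a parameter so you can test it on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: comment out every uncommented fstab entry of type swap.
# Usage: comment_out_swap FSTAB
comment_out_swap() {
  fstab=$1
  tmp=$(mktemp) || return 1
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # rewrite in place, keeping the original file's owner and mode
  status=$?
  rm -f "$tmp"
  return $status
}
```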

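#4. Time synchronization (the sed below assumes the stock CentOS 7 chrony.conf, whose lines 3-6 are the default pool servers)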

yum -y install chrony

sed -i.bak '3,6d' /etc/chrony.conf && sed -i '3cserver ntp1.aliyun.com iburst' /etc/chrony.conf

systemctl start chronyd && systemctl enable chronyd

chronyc sources
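The `sed '3,6d'` edit depends on the exact line layout of the stock chrony.conf. The sketch below avoids line numbers: it comments out every existing `server` or `pool` line and then appends the Aliyun server. The function name is hypothetical. After running it on /etc/chrony.conf, restart chronyd and check the result with `chronyc sources` as above.

```shell
#!/bin/sh
# Hypothetical helper: point chrony at a single NTP server.
# Usage: set_chrony_server CONF SERVER
set_chrony_server() {
  conf=$1 ntp=$2
  # Keep a .bak copy and comment out the existing server/pool lines (GNU sed)
  sed -i.bak -E 's/^(server|pool)[[:space:]]/#&/' "$conf" || return 1
  # Then add the chosen server
  printf 'server %s iburst\n' "$ntp" >> "$conf"
}
```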

#5. Configure hosts resolution

cat >>/etc/hosts<<EOF

192.168.110.100 master

192.168.110.101 node1

192.168.110.102 node2

EOF
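`cat >>` appends the three entries again every time this step is re-run. Below is an idempotent sketch. `add_host_entry` is a hypothetical helper, and the hosts file is passed as a parameter. Usage would be `add_host_entry /etc/hosts 192.168.110.100 master`, repeated for node1 and node2.

```shell
#!/bin/sh
# Hypothetical helper: add "IP NAME" to a hosts file unless a line with that
# IP already lists NAME. Usage: add_host_entry FILE IP NAME
add_host_entry() {
  file=$1 ip=$2 name=$3
  if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```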

#6. Set kernel parameters

cat >/etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf
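To confirm that the settings took effect, read the live values from /proc/sys. The net/bridge entries only exist once the br_netfilter module is loaded, which is why the modprobe above matters. This is a minimal sketch. The function name is hypothetical, and the optional root-directory argument exists only so the check can be tested against a fake tree.

```shell
#!/bin/sh
# Hypothetical helper: verify that the sysctls Kubernetes needs are set to 1.
# Usage: check_k8s_sysctls [ROOT]   (ROOT defaults to "", i.e. the real /proc)
check_k8s_sysctls() {
  root=${1:-}
  rc=0
  for key in net/bridge/bridge-nf-call-iptables net/bridge/bridge-nf-call-ip6tables net/ipv4/ip_forward; do
    f="$root/proc/sys/$key"
    if [ ! -r "$f" ]; then
      echo "missing: $key (is br_netfilter loaded?)" >&2
      rc=1
    elif [ "$(cat "$f")" != 1 ]; then
      echo "not set to 1: $key" >&2
      rc=1
    fi
  done
  return $rc
}
```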

#7. Check DNS

cat /etc/resolv.conf

#8. Install ipvs

## Write the following into the file

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

## Make the script executable, run it, and check that the required kernel modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

#9. Install dependencies

yum install -y yum-utils

yum -y install ipset ipvsadm

yum install -y ebtables socat ipset conntrack

# From here on it seems you can simply run kk and let it do everything (it is all-in-one)

#10. Install and configure docker

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

yum install -y docker-ce

systemctl enable docker && systemctl start docker

## Configure the Docker registry mirror and set Docker's cgroup driver to systemd

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://4puehki1.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

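## Alternative way to set Docker's cgroup driver to systemd. Do not combine it with "exec-opts" in daemon.json: dockerd refuses to start when the same option is set both as a flag and in the config file

#sed -i.bak "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service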

systemctl daemon-reload

systemctl restart docker

docker info|grep "Registry Mirrors" -A 1

## Install k8s

cat <<EOF > kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

mv kubernetes.repo /etc/yum.repos.d/

yum install -y kubelet-1.22.10 kubectl-1.22.10

#11. Download KubeKey

# export KKZONE=cn   # uncomment to download from the China mirror if GitHub/googleapis is hard to reach

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.3.0 sh -

chmod +x kk

## Generate a configuration file

./kk create config --with-kubernetes v1.22.10 --with-kubesphere v3.3.1

## Edit the configuration, then run

./kk create cluster -f config-sample.yaml

## Check the installation logs

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

config-sample.yaml

Usually only hosts and roleGroups need to be changed.

## To enable openpitrix (the App Store), set spec.openpitrix.store.enabled to true in the ClusterConfiguration below
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master, address: 192.168.110.100, internalAddress: 192.168.110.100, user: root, password: "1234"}
  - {name: node1, address: 192.168.110.101, internalAddress: 192.168.110.101, user: root, password: "1234"}
  - {name: node2, address: 192.168.110.102, internalAddress: 192.168.110.102, user: root, password: "1234"}
  roleGroups:
    etcd:
    - master
    control-plane:
    - master
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy

    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.22.10
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.3.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  zone: ""
  local_registry: ""
  namespace_override: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #  resources: {}
    # controllerManager:
    #  resources: {}
    redis:
      enabled: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    # resources: {}
    jenkinsMemoryLim: 8Gi
    jenkinsMemoryReq: 4Gi
    jenkinsVolumeSize: 8Gi
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
    istio:
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime:
    enabled: false
    kubeedge:
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ""
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  terminal:
    timeout: 600

Enabling the built-in high-availability mode

To enable the built-in high-availability mode, uncomment the internalLoadbalancer field.

In config-sample.yaml, address and port must be indented by two spaces (under controlPlaneEndpoint). The load balancer's default internal domain name is lb.kubesphere.local.

Kubernetes 1.25.4 Installation and Deployment (Single Node)

1. Install and configure the prerequisites

kubeadm reset -f

modprobe -r ipip

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd

rm -rf /var/etcd

yum clean all

yum remove kube* -y

# Configure the yum mirrors (this deletes all existing .repo files first)

rm -f /etc/yum.repos.d/*.repo

cat > /etc/yum.repos.d/base.repo <<EOF

[base]

name=base

baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/os/\$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

[extras]

name=extras

baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/extras/\$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

[updates]

name=updates

baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/updates/\$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

[centosplus]

name=centosplus

baseurl=https://mirrors.cloud.tencent.com/centos/\$releasever/centosplus/\$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-\$releasever

[epel]

name=epel

baseurl=https://mirrors.cloud.tencent.com/epel/\$releasever/\$basearch/

gpgcheck=1

gpgkey=https://mirrors.cloud.tencent.com/epel/RPM-GPG-KEY-EPEL-\$releasever

EOF

# Install essential tools

yum -y install vim tree lrzsz wget jq psmisc net-tools telnet yum-utils device-mapper-persistent-data lvm2 git

# Preliminary steps

systemctl disable --now firewalld

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff -a

# Upgrade the kernel

# Import the ELRepo repository

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

# List the available kernel packages

yum --enablerepo="elrepo-kernel" list --showduplicates | sort -r | grep kernel-ml.x86_64

# Choose either the ML (mainline) or the LT (long-term support) kernel

# Install the ML version

yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y

# Install the LT version (choose this one for K8S)

yum --enablerepo=elrepo-kernel install kernel-lt-devel kernel-lt -y

# Set the default boot kernel

grubby --default-kernel

awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

grub2-set-default 0

grubby --default-kernel

reboot
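`grub2-set-default 0` assumes the newly installed kernel is the first menu entry. The sketch below numbers the entries so you can pick the right index before rebooting. The function name is hypothetical. It assumes a flat menu without submenus, which is the usual CentOS 7 layout; entries inside a submenu are numbered differently.

```shell
#!/bin/sh
# Hypothetical helper: print "INDEX : TITLE" for each top-level menuentry.
# Usage: list_grub_entries [GRUB_CFG]   (defaults to /etc/grub2.cfg)
list_grub_entries() {
  awk -F\' '$1 == "menuentry " { printf "%d : %s\n", n, $2; n++ }' "${1:-/etc/grub2.cfg}"
}
```

Pass the index of the desired kernel to `grub2-set-default`, then confirm with `grubby --default-kernel` as above.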

# Install ipvs-related tools

yum -y install ipvsadm ipset sysstat conntrack libseccomp

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack # on kernels older than 4.18, use nf_conntrack_ipv4 instead

# One module per line: modules-load.d files do not allow trailing comments.
# On kernels older than 4.18, replace nf_conntrack below with nf_conntrack_ipv4.
cat > /etc/modules-load.d/ipvs.conf <<EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
EOF

systemctl restart systemd-modules-load.service
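Instead of editing the nf_conntrack line by hand according to the kernel version, you can detect the module name with modinfo. The sketch below works under that assumption: older kernels ship nf_conntrack_ipv4, while newer ones merged it into nf_conntrack. The function names are hypothetical, and the output file is passed as a parameter. On a node, follow it with `systemctl restart systemd-modules-load.service` as above.

```shell
#!/bin/sh
# Hypothetical helper: print the conntrack module name this kernel provides.
conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

# Write the module list (one per line, no duplicates, no comments) to FILE.
# Usage: write_ipvs_modules_conf FILE
write_ipvs_modules_conf() {
  file=$1
  {
    for m in ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr \
             ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp; do
      echo "$m"
    done
    conntrack_module
    for m in ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT ipip; do
      echo "$m"
    done
  } > "$file"
}
```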

# Enable the kernel parameters a k8s cluster needs; configure them on both master and worker nodes (see the Appendix for details)

cat > /etc/sysctl.d/k8s.conf <<EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536


net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system

reboot

# Install containerd: first configure the kernel modules it needs

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

# Load the modules. This is required; otherwise cluster init will fail later

modprobe overlay

modprobe br_netfilter

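# Kernel parameters required by containerd. They go into a separate file, because writing /etc/sysctl.d/k8s.conf again would overwrite the tuning configured above

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf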

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

# sysctl -p /etc/sysctl.d/k8s.conf

sysctl --system

2. Install containerd

Install with yum:

# Add the package repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Search for available containerd packages

yum search containerd

# Install containerd

yum install containerd.io -y

Binary installation:

# Install containerd

wget https://github.com/containerd/containerd/releases/download/v1.6.10/cri-containerd-1.6.10-linux-amd64.tar.gz

tar xf cri-containerd-1.6.10-linux-amd64.tar.gz -C /

3. Configure containerd

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

# Switch containerd's cgroup driver to systemd and point sandbox_image at the Aliyun google_containers mirror

# Modify it with the following command

sed -ri -e 's/(.*SystemdCgroup = ).*/\1true/' -e 's@(.*sandbox_image = ).*@\1\"harbor.raymonds.cc/google_containers/pause:3.8\"@' /etc/containerd/config.toml

# If you don't have Harbor, run this command instead

sed -ri -e 's/(.*SystemdCgroup = ).*/\1true/' -e 's@(.*sandbox_image = ).*@\1\"registry.aliyuncs.com/google_containers/pause:3.8\"@' /etc/containerd/config.toml
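The two sed substitutions above can be wrapped with a check that they actually matched, because `sed` exits successfully even when nothing was replaced. This is a sketch. The function name is hypothetical, and the pause image is a parameter; the text uses registry.aliyuncs.com/google_containers/pause:3.8. It requires GNU sed for `-r` and `-i`.

```shell
#!/bin/sh
# Hypothetical helper: enable SystemdCgroup and set sandbox_image in a
# containerd config.toml, then verify that both edits are present.
# Usage: set_containerd_cgroup CONFIG_TOML PAUSE_IMAGE
set_containerd_cgroup() {
  cfg=$1 image=$2
  sed -ri -e 's/(.*SystemdCgroup = ).*/\1true/' \
          -e "s@(.*sandbox_image = ).*@\1\"$image\"@" "$cfg" || return 1
  # Non-zero exit if either setting is missing (e.g. the keys were not found)
  grep -q 'SystemdCgroup = true' "$cfg" &&
    grep -qF "sandbox_image = \"$image\"" "$cfg"
}
```

On a node: `set_containerd_cgroup /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.8 || echo "config.toml was not updated"`.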

# Configure registry mirrors and a private registry

# Modify it with the following command

sed -i -e '/.*registry.mirrors.*/a\ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]\n endpoint = ["https://registry.docker-cn.com" ,"http://hub-mirror.c.163.com" ,"https://docker.mirrors.ustc.edu.cn"]\n [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.raymonds.cc"]\n endpoint = ["http://harbor.raymonds.cc"]' -e '/.*registry.configs.*/a\ [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.raymonds.cc".tls]\n insecure_skip_verify = true\n [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.raymonds.cc".auth]\n username = "admin"\n password = "123456"' /etc/containerd/config.toml

# Without Harbor, skip the private registry settings and only configure the mirrors with this command

sed -i '/.*registry.mirrors.*/a\ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]\n endpoint = ["https://registry.docker-cn.com" ,"http://hub-mirror.c.163.com" ,"https://docker.mirrors.ustc.edu.cn"]' /etc/containerd/config.toml

# Tell the crictl client where the container runtime socket is

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

systemctl daemon-reload && systemctl enable --now containerd

### Inspect the installation

[root@k8s-master01 ~]# ctr version

Client:

Version: v1.6.8

Revision: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6

Go version: go1.17.13

Server:

Version: v1.6.8

Revision: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6

UUID: 18d9c9c1-27cc-4883-be10-baf17a186aad

[root@k8s-master01 ~]# crictl version

Version: 0.1.0

RuntimeName: containerd

RuntimeVersion: v1.6.8

RuntimeApiVersion: v1

[root@k8s-master01 ~]# crictl info

...

},

...

"lastCNILoadStatus": "cni config load failed: no network config found in /etc/cni/net.d: cni plugin not initialized: failed to load cni config",

"lastCNILoadStatus.default": "cni config load failed: no network config found in /etc/cni/net.d: cni plugin not initialized: failed to load cni config"

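}

# The CNI error above can be ignored. containerd's own CNI plugins are not installed, and Kubernetes does not need them (installing them can even cause conflicts); the flannel or calico CNI plugin is installed later.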

# nerdctl, a command-line client for containerd

wget https://github.com/containerd/nerdctl/releases/download/v1.0.0/nerdctl-1.0.0-linux-amd64.tar.gz

tar xf nerdctl-1.0.0-linux-amd64.tar.gz -C /usr/local/bin/

# Configure nerdctl

mkdir -p /etc/nerdctl/

cat > /etc/nerdctl/nerdctl.toml <<EOF

namespace = "k8s.io" # default namespace for nerdctl

insecure_registry = true # skip the registry security (TLS) check

EOF

# Install buildkit so that nerdctl can build images

wget https://github.com/moby/buildkit/releases/download/v0.10.6/buildkit-v0.10.6.linux-amd64.tar.gz

tar xf buildkit-v0.10.6.linux-amd64.tar.gz -C /usr/local/

# buildkit needs two systemd unit files

cat > /usr/lib/systemd/system/buildkit.socket <<EOF

[Unit]

Description=BuildKit

Documentation=https://github.com/moby/buildkit

[Socket]

ListenStream=%t/buildkit/buildkitd.sock

SocketMode=0660

[Install]

WantedBy=sockets.target

EOF

cat > /usr/lib/systemd/system/buildkit.service << EOF

[Unit]

Description=BuildKit

Requires=buildkit.socket

After=buildkit.socket

Documentation=https://github.com/moby/buildkit

[Service]

Type=notify

ExecStart=/usr/local/bin/buildkitd --addr fd://

[Install]

WantedBy=multi-user.target

EOF

# Start buildkit

systemctl daemon-reload && systemctl enable --now buildkit

# WARNING!!

nerdctl version

Client:

Version: v1.0.0

OS/Arch: linux/amd64

Git commit: c00780a1f5b905b09812722459c54936c9e070e6

buildctl:

Version: v0.10.6

GitCommit: 0c9b5aeb269c740650786ba77d882b0259415ec7

Server:

containerd:

Version: v1.6.10

GitCommit: 770bd0108c32f3fb5c73ae1264f7e503fe7b2661

runc:

Version: 1.1.4

GitCommit: v1.1.4-0-g5fd4c4d1

### In my case it reported that runc does not exist; install it:

wget https://github.com/opencontainers/runc/releases/download/v1.1.4/runc.amd64

install -m 755 runc.amd64 /usr/local/sbin/runc

buildctl --version

buildctl github.com/moby/buildkit v0.10.6 0c9b5aeb269c740650786ba77d882b0259415ec7

nerdctl info

Client:

Namespace: k8s.io

Debug Mode: false

Server:

Server Version: v1.6.10

Storage Driver: overlayfs

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Log: fluentd journald json-file syslog

Storage: native overlayfs

Security Options:

seccomp

Profile: default

Kernel Version: 5.4.224-1.el7.elrepo.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 1.914GiB

Name: master

ID: 35d28da8-2531-4558-b16c-143d4b91e679

# nerdctl command completion

yum -y install bash-completion

echo "source <(nerdctl completion bash)" >> ~/.bashrc

systemctl daemon-reload

systemctl enable --now containerd

4. Verify containerd

# Check the service status

systemctl status containerd

# Check the processes and listening ports

ps -ef | grep containerd

ss -ntlup | grep containerd

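# Inspect the storage directory (this assumes root was changed to /data/containerd in config.toml; the default is /var/lib/containerd)

ls /data/containerd/

5. Install kubeadm, kubelet, kubectl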

# Add the package repository

cat > /etc/yum.repos.d/kubernetes.repo <<-EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF
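In the repo file above, `$basearch` is written as `\$basearch` because the heredoc delimiter (`EOF`) is unquoted. With an unquoted delimiter the shell expands variables before writing the file, and the backslash keeps the literal `$basearch` so that yum can substitute it. The sketch below shows the difference. It writes to a temporary directory instead of `/etc/yum.repos.d/` (the temp paths are illustrative) and leaves the files in place for inspection.

```shell
#!/usr/bin/env bash
# Demonstrates why the repo file escapes $basearch inside an unquoted heredoc.
unset basearch          # make sure the shell has no variable with this name
tmp="$(mktemp -d)"      # illustrative scratch directory, left for inspection

# Unescaped: the shell expands $basearch (empty) before writing the file.
cat > "$tmp/unescaped.repo" <<EOF
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-$basearch
EOF

# Escaped: \$ keeps the literal text, so yum can substitute it later.
cat > "$tmp/escaped.repo" <<EOF
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-\$basearch
EOF

# Quoted delimiter: no expansion at all, so no escaping is needed.
cat > "$tmp/quoted.repo" <<'EOF'
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-$basearch
EOF

cat "$tmp/unescaped.repo"   # ...kubernetes-el7-           (variable lost)
cat "$tmp/escaped.repo"     # ...kubernetes-el7-$basearch
cat "$tmp/quoted.repo"      # ...kubernetes-el7-$basearch
```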

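# yum list kubelet --showduplicates

# yum install kubelet-1.24.0-0

# PS: because the official site does not offer a way to sync its repository, the index GPG check may fail; in that case install with yum install -y --nogpgcheck kubelet kubeadm kubectl

# Pin the packages to the same version passed to kubeadm init --kubernetes-version below

yum install -y --nogpgcheck kubelet-1.25.4 kubeadm-1.25.4 kubectl-1.25.4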

# Start the kubelet service and enable it at boot

systemctl daemon-reload

systemctl enable --now kubelet
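The `--kubernetes-version` in the `kubeadm init` call below has to match the installed packages, so it can help to compare the versions first. This is a minimal sketch. It assumes `kubeadm version -o short` prints a string like `v1.25.4` and `kubelet --version` prints `Kubernetes v1.25.4`. `TARGET_VERSION`, `normalize_version`, `same_version` and `check_installed` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: make sure kubeadm/kubelet match the version given to `kubeadm init`.

TARGET_VERSION="${TARGET_VERSION:-v1.25.4}"

# Normalise "Kubernetes v1.25.4", "v1.25.4" or "1.25.4" to "1.25.4".
normalize_version() {
  local v="${1##* }"   # keep the last whitespace-separated word
  echo "${v#v}"        # drop a leading "v"
}

# Return 0 if both versions are identical after normalisation.
same_version() {
  [ "$(normalize_version "$1")" = "$(normalize_version "$2")" ]
}

# Check only the tools that are actually installed on this host.
check_installed() {
  local rc=0
  if command -v kubeadm >/dev/null 2>&1; then
    same_version "$(kubeadm version -o short)" "$TARGET_VERSION" \
      || { echo "kubeadm does not match $TARGET_VERSION" >&2; rc=1; }
  fi
  if command -v kubelet >/dev/null 2>&1; then
    same_version "$(kubelet --version)" "$TARGET_VERSION" \
      || { echo "kubelet does not match $TARGET_VERSION" >&2; rc=1; }
  fi
  return "$rc"
}

# Usage: TARGET_VERSION=v1.25.4 check_installed && echo "versions OK"
```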

kubeadm init \
  --apiserver-advertise-address=192.168.100.110 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.25.4 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
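The Pod network (`--pod-network-cidr`), the Service network (`--service-cidr`) and the node network must not overlap. This is a minimal pure-bash sketch for IPv4 that checks the values used above. The node subnet `192.168.100.0/24` is an assumption inferred from `--apiserver-advertise-address=192.168.100.110`, so replace it with your real host network. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: detect overlapping IPv4 CIDRs with bash arithmetic only.

# Convert dotted-quad "a.b.c.d" to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "start end" (inclusive integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  local ip="${1%/*}" len="${1#*/}"
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

POD_CIDR="10.244.0.0/16"
SVC_CIDR="10.96.0.0/16"
NODE_CIDR="192.168.100.0/24"   # assumption: host network of the master

for pair in "$POD_CIDR $SVC_CIDR" "$POD_CIDR $NODE_CIDR" "$SVC_CIDR $NODE_CIDR"; do
  set -- $pair   # split the pair into $1 and $2
  if cidrs_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2"
  fi
done
```

The `--pod-network-cidr` note in the kubeadm reference gives Calico's default pool as 192.168.0.0/16. The same check would flag that pool as overlapping a 192.168.100.0/24 node network, which is one reason to pass an explicit Pod CIDR.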

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Enable kubectl command completion

yum -y install bash-completion

# Permanently add auto-completion to the bash shell

echo "source <(kubectl completion bash)" >> ~/.bashrc

kubectl get nodes

# This URL is very likely unreachable from networks in mainland China; look online for a domestic mirror to install flannel from

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
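flannel's manifest defines its own Pod network in the `net-conf.json` entry of its ConfigMap. The kubeadm notes further down give flannel's default as 10.244.0.0/16, and that value should equal `--pod-network-cidr`. This is a small sketch that reads the value from a locally downloaded manifest and compares it with the Pod CIDR. The file name `kube-flannel.yml` and the grep/sed parsing are assumptions; this is not a real YAML parser. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare flannel's "Network" setting with kubeadm's --pod-network-cidr.

POD_CIDR="${POD_CIDR:-10.244.0.0/16}"

# Print the first "Network": "x.x.x.x/nn" value found in a manifest file.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*: *"\([^"]*\)"/\1/'
}

# Return 0 if the manifest's network equals the expected Pod CIDR.
flannel_matches() {
  [ "$(flannel_network "$1")" = "$2" ]
}

# Usage (after downloading the manifest to the current directory):
#   flannel_matches kube-flannel.yml "$POD_CIDR" || echo "edit net-conf.json first"
```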

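# Install only one network plugin (flannel above or calico below); to remove one that was already applied: kubectl delete -f xxxx.yaml

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Postscript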

Common Kubernetes kernel tuning parameters explained:
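The parameters below only take effect after they are written to a sysctl configuration file and loaded. This is a minimal sketch that writes a subset of them to a file you can review first (a temporary file by default). It sizes `nf_conntrack_max` with the RAM-based formula quoted in the `nf_conntrack_max` entry, using x = 64 for a 64-bit kernel, and computes how long the keepalive settings take to detect a dead peer. The key subset, the target name `/etc/sysctl.d/k8s.conf` and the helper names are assumptions. `sysctl --system` reads every `*.conf` file under `/etc/sysctl.d/`.

```shell
#!/usr/bin/env bash
# Sketch: write a sysctl drop-in for Kubernetes nodes to a file for review.
# Assumption: key subset and target name k8s.conf are illustrative. Apply as root:
#   modprobe br_netfilter      # the net.bridge.* keys exist only with this module
#   cp "$OUT" /etc/sysctl.d/k8s.conf && sysctl --system

# CONNTRACK_MAX = RAMSIZE (bytes) / 16384 / (x / 32), x = pointer width in bits.
conntrack_max() {
  local bytes="${1:-0}" bits="${2:-64}"
  echo $(( bytes / 16384 / (bits / 32) ))
}

# Total RAM in bytes; /proc/meminfo reports MemTotal in kB (0 if unavailable).
ram_bytes() {
  local kb
  kb="$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo 2>/dev/null)"
  echo $(( ${kb:-0} * 1024 ))
}

# Worst-case seconds before a dead peer is detected:
# tcp_keepalive_time + tcp_keepalive_probes * tcp_keepalive_intvl.
keepalive_detect_seconds() {
  echo $(( $1 + $2 * $3 ))
}

# Write the drop-in: $1 = output path, $2 = nf_conntrack_max value.
write_sysctl_conf() {
  cat > "$1" <<EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
net.netfilter.nf_conntrack_max = $2
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.core.somaxconn = 16384
EOF
}

OUT="${OUT:-$(mktemp)}"
MAX="$(conntrack_max "$(ram_bytes)" 64)"
write_sysctl_conf "$OUT" "$MAX"
echo "wrote $OUT (nf_conntrack_max = $MAX)"
# 645 s with the values below, versus 7875 s with the kernel defaults (7200, 9, 75).
echo "dead peer detected after ~$(keepalive_detect_seconds 600 3 15) s"
```

For example, 2 GiB of RAM on a 64-bit kernel gives 2147483648 / 16384 / 2 = 65536 entries, and 8 GiB gives 262144.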

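net.ipv4.ip_forward = 1 #0 disables IP forwarding; 1 enables it.

net.bridge.bridge-nf-call-iptables = 1 #With 1, packets forwarded by the layer-2 bridge also pass through the iptables FORWARD rules, so L3 iptables rules end up filtering L2 frames; kube-proxy in iptables mode relies on this to see bridged Pod traffic

net.bridge.bridge-nf-call-ip6tables = 1 #Whether bridged IPv6 packets are filtered by the ip6tables chains

fs.may_detach_mounts = 1 #Must be set to 1 when containers run on the system (this key exists only on RHEL/CentOS 7 vendor kernels)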

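vm.overcommit_memory=1

#0: heuristic overcommit. The kernel checks whether enough memory is available for the process; if so, the allocation is allowed, otherwise it fails and the error is returned to the process.

#1: always overcommit. The kernel grants every allocation regardless of the current memory state.

#2: never overcommit. The total committed memory is limited to swap plus vm.overcommit_ratio percent of physical RAM.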

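vm.panic_on_oom=0

#OOM stands for out of memory: memory is exhausted and cannot be allocated. This parameter controls how the kernel reacts to an OOM.

#0: on OOM, the OOM killer is started.

#1: on OOM, the kernel panics (the system reboots if kernel.panic is set), except when the OOM is confined to a cpuset, mempolicy or memory cgroup, in which case the OOM killer runs.

#2: on OOM, a kernel panic is always forced (the system reboots if kernel.panic is set).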

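fs.inotify.max_user_watches=89100 #Maximum number of inotify watches one user can hold at the same time (watches are usually placed on directories, so this limits how many directories one user can monitor at once)

fs.file-max=52706963 #Maximum number of open file handles across all processes

fs.nr_open=52706963 #Maximum number of files a single process can open

net.netfilter.nf_conntrack_max=2310720 #Size of the connection tracking table. Size it from RAM: CONNTRACK_MAX = RAMSIZE (in bytes) / 16384 / (x / 32), where x is the architecture's pointer width in bits (32 or 64), and keep nf_conntrack_max = 4 * nf_conntrack_buckets. Default 262144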

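net.ipv4.tcp_keepalive_time = 600 #Idle time on a connection before TCP starts sending keepalive probes; default 7200 s (2 hours)

net.ipv4.tcp_keepalive_probes = 3 #Number of keepalive probes sent after tcp_keepalive_time without an acknowledgement before the connection is considered dead; default 9

net.ipv4.tcp_keepalive_intvl = 15 #Interval between keepalive probes; default 75 s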

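net.ipv4.tcp_max_tw_buckets = 36000 #Maximum number of sockets kept in TIME_WAIT; beyond it, new TIME_WAIT sockets are destroyed immediately. Intermediate proxies such as Nginx should watch this value: once all local ports are tied up, the service fails, and this limit lowers the chance of that happening and buys time to react.

net.ipv4.tcp_tw_reuse = 1 #Only affects the client (outgoing-connection) side: a TIME_WAIT socket can be reused for a new connection once it is about 1 s old. It relies on TCP timestamps, so it has no effect while net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_max_orphans = 327680 #Maximum number of sockets not attached to any process (orphans) the system will handle; watch it when many connections must be set up quickly.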

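net.ipv4.tcp_orphan_retries = 3

#Relevant when many connections are stuck in FIN-WAIT-1:

#A FIN can be lost after it is sent, so how many times is it retransmitted? FIN retransmission also backs off; the 2.6.32-358 kernel uses exponential backoff (2 s, 4 s, ...), and the total number of retries is capped by tcp_orphan_retries.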

net.ipv4.tcp_syncookies = 1 #Switch for SYN cookies, which protect against some SYN flood attacks. tcp_synack_retries and tcp_syn_retries set the retry counts for SYN-ACK and SYN packets.

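net.ipv4.tcp_max_syn_backlog = 16384 #Maximum queue length for incoming SYN requests, i.e. the limit on half-open connections; default 1024. Raising it clearly helps heavily loaded servers.

net.ipv4.ip_conntrack_max = 65536 #Limits the number of tracked connections (default 65536). This is the legacy name from the old ip_conntrack module; current kernels use net.netfilter.nf_conntrack_max, set above.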

net.ipv4.tcp_timestamps = 0 #Disables TCP timestamps. Background: with iptables NAT, internal hosts could ping a domain but curl to it failed; the cause turned out to be net.ipv4.tcp_timestamps = 1 (timestamps enabled)

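net.core.somaxconn = 16384 #Kernel parameter that caps the backlog of a listening socket. The backlog is the socket's listen queue: a connection that the server has not yet handled waits there and leaves the queue once the server processes it. If the server is too slow and the queue fills up, new connections are rejected.

Configure registry mirrors and a private image registry: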

[root@k8s-master01 ~]# vim /etc/containerd/config.toml

...

[plugins."io.containerd.grpc.v1.cri".registry]

...

#The following lines configure authentication for a private registry; skip them if you have no private registry

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.raymonds.cc".tls]

insecure_skip_verify = true

[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.raymonds.cc".auth]

username = "admin"

password = "123456"

...

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

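#The next two lines configure registry mirrors (pull acceleration) for docker.io

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://registry.docker-cn.com", "http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"]

#The next two lines configure the private registry; skip them if you have no private registry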

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.raymonds.cc"]

endpoint = ["http://harbor.raymonds.cc"]

...

kubeadm init option reference

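--kubernetes-version: Version of the Kubernetes components; it must match the version of the installed kubelet package.

--control-plane-endpoint: Required for multi-master setups. Sets a fixed access address for the control plane, either an IP address or a DNS name, which is used as the API Server address in the kubeconfig files of cluster administrators and cluster components. It can be omitted for a single control-plane deployment. Note: kubeadm does not support converting a single control-plane cluster created without --control-plane-endpoint into a highly available cluster.

--pod-network-cidr: Address range of the Pod network in CIDR notation. The Flannel network plugin usually defaults to 10.244.0.0/16 and the Calico plugin to 192.168.0.0/16.

--service-cidr: Address range of the Service network in CIDR notation; default 10.96.0.0/12. Usually only network plugins such as Flannel require it to be set manually.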

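--service-dns-domain string: Cluster domain name, default cluster.local; names in it are resolved automatically by the cluster DNS service.

--apiserver-advertise-address: The IP address the API server advertises it is listening on; if unset, the default network interface is used. This is the address the apiserver announces to other components, normally the master node's IP for intra-cluster communication; 0.0.0.0 means all available addresses on this node. Optional.

--image-repository string: Registry to pull the control-plane images from. The default is k8s.gcr.io (registry.k8s.io since kubeadm v1.25), which may be unreachable from mainland China, so you can point it at a domestic mirror.

--token-ttl: Lifetime of the bootstrap token, 24 hours by default; 0 means it never expires. To keep a leaked token (e.g. one stored insecurely) from compromising the cluster, setting an expiry is recommended. If the token has expired and you want to add more nodes, create a new token and print the join command with: kubeadm token create --print-join-command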

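--ignore-preflight-errors=Swap: If swap is not disabled on the nodes, add this option so that kubeadm ignores the corresponding preflight error.

--upload-certs: Upload the control-plane certificates to the kubeadm-certs Secret.

--cri-socket: From v1.24 on, the path of the CRI socket to connect to; note that each CRI uses a different socket file:

#If the CRI is containerd, use --cri-socket unix:///run/containerd/containerd.sock

#If the CRI is docker (via cri-dockerd), use --cri-socket unix:///var/run/cri-dockerd.sock

#If the CRI is CRI-O, use --cri-socket unix:///var/run/crio/crio.sock

#Note: CRI-O and containerd manage containers differently, so their image stores cannot be shared.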
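The socket paths listed above can be wrapped in a small helper. In this sketch, `cri_socket_for` maps a runtime name to its `--cri-socket` value, and `detect_cri_socket` picks the first of those sockets that exists on the host. Both function names are hypothetical. kubeadm can also auto-detect the runtime when exactly one CRI socket is present.

```shell
#!/usr/bin/env bash
# Sketch: choose the --cri-socket value for kubeadm (paths from the notes above).

# Map a runtime name to its CRI endpoint.
cri_socket_for() {
  case "$1" in
    containerd) echo "unix:///run/containerd/containerd.sock" ;;
    docker)     echo "unix:///var/run/cri-dockerd.sock" ;;   # via cri-dockerd
    crio)       echo "unix:///var/run/crio/crio.sock" ;;
    *)          return 1 ;;
  esac
}

# Print the endpoint of the first runtime whose socket file exists.
detect_cri_socket() {
  local rt endpoint
  for rt in containerd docker crio; do
    endpoint="$(cri_socket_for "$rt")"
    if [ -S "${endpoint#unix://}" ]; then
      echo "$endpoint"
      return 0
    fi
  done
  return 1
}

# Usage:
#   kubeadm init --cri-socket "$(cri_socket_for containerd)" ...
#   sock="$(detect_cri_socket)" || echo "no CRI socket found" >&2
```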